Everyone is supposed to get an EV now, because apparently switching to electric cars will help save us from the extremes of climate change. Hint – it won’t, at least not on its own (ever wondered how much GHG a cruise ship emits?). There are plenty of problems with EVs: they are expensive, the provenance of their materials is questionable, they are too heavy, and they have a short lifespan, a short range, and fire potential (just look at the two car carriers that have caught fire in the past year). But there are other issues as well, namely the code used to run the cars.

Most new cars now have a good bit of code in them, used to run much of the car, to the point where you need to plug the car into an analysis system to determine what’s wrong. They will get even more code once autonomous systems become more common (will they? … they don’t seem that robust at the moment… and you certainly can’t compare them to autonomous trains, because those run on tracks). A modern car runs on roughly 100 million lines of code (LOC), which is a lot of code for a vehicle that doesn’t do anything out of the ordinary (I mean, a human still has to drive it). An EV supposedly has up to 150 million LOC, and an autonomous vehicle supposedly has 1 billion LOC running it. To put that into perspective, Apollo 11 used 145,000 LOC, written in AGC assembly (you can look at the code here if you like). Sure, autonomous vehicles likely have to do a lot more, and have a lot more sensor feeds, but 1,000,000,000 LOC is a lot.

That is a lot, considering the bug-plagued F-35 uses 8 million LOC to run the jet (everything from flight controls to fusing together sensor data), and another 24 million LOC to run the maintenance and logistics software. So, what exactly is happening with car software? Now, the 100M LOC in a gas car is likely about the same amount found in an EV, because the two simply have different types of complexity (managing electric motors and batteries, versus more complex gas engines). But that’s still a lot of code to do nothing autonomously. Why is there so much more code in a car than in a jet plane? It certainly can’t be all the combat systems in a car.
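To make the scale concrete, here is a minimal Python sketch that compares the line counts quoted above. The numbers are just the rough figures from this post, not measured values, and the `LOC` table and `ratio` helper are names made up for illustration.

```python
# Rough LOC figures as quoted in this post (not measurements).
LOC = {
    "Apollo 11 (AGC)": 145_000,
    "F-35 (jet)": 8_000_000,
    "F-35 (maintenance and logistics)": 24_000_000,
    "Modern car": 100_000_000,
    "EV": 150_000_000,
    "Autonomous vehicle": 1_000_000_000,
}


def ratio(system, baseline):
    """How many times more code `system` has than `baseline`."""
    return LOC[system] / LOC[baseline]


if __name__ == "__main__":
    # Print every system relative to the code that runs the F-35 itself.
    for name in LOC:
        print(f"{name}: {ratio(name, 'F-35 (jet)'):.2f}x the F-35 jet code")
```

By these figures a modern car carries 12.5 times the code that flies the F-35, and an autonomous vehicle carries roughly 6,900 times the code of Apollo 11.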

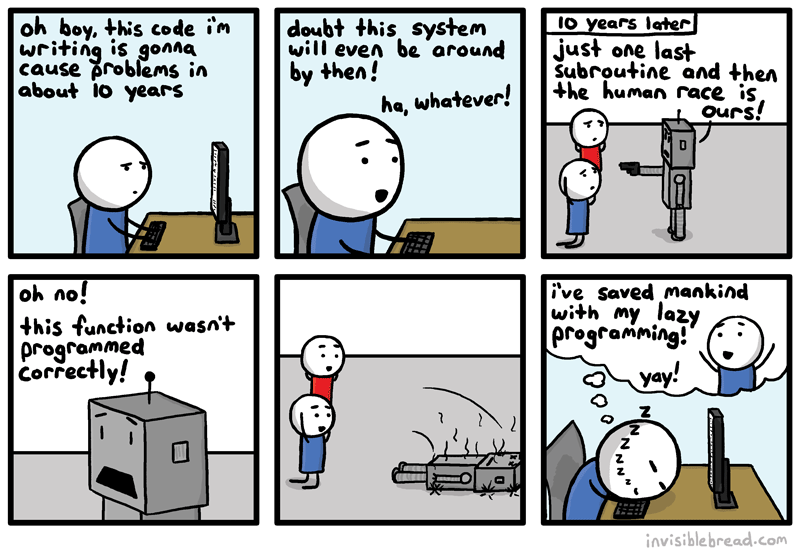

The only thing that might explain this is sub-par programming. Planes, which aircraft manufacturers prefer to keep in the air, are a totally different animal from cars. There are usually fewer aircraft incidents than car crashes because there is a higher quality associated with aircraft software. But if we set aside autonomous vehicles, and only look at manual-drive gas and electric vehicles, it still raises the question – why so much software? It’s just running the engine and a few ancillary systems… nothing special really. Most of the code for these systems is supposedly written in C and C++ (with a sprinkling of assembler, Ada, and Fortran), so perhaps some of the programmers are just writing sub-standard code? I mean, C is a pretty no-nonsense language known for systems development… why would you need 100 million LOC to run a car? Perhaps the people doing the programming lack experience in embedded systems programming?

EV software is even more critical because, unlike gas-powered cars, where the software basically runs the engine and ancillary systems, there is much more of a safety issue. EVs require a battery management system (BMS) to keep the EV’s battery functioning properly, correcting issues or isolating malfunctioning battery cells immediately. Poor software can have much more dire consequences in a car with a 400-800 volt electrical system tied to a heavy battery system (anywhere from 300-800 kg). So apart from the battery (which in my opinion is the biggest red-flag system in an EV), the next most important system is the software. There is no mechanical system or engine, which basically means the software, all 150M lines of it, runs the car.
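As a rough idea of the kind of check a BMS has to get right, here is a minimal Python sketch that flags cells for isolation. It is only an illustration: the thresholds, the `CellReading` type, and the `cells_to_isolate` function are all assumptions of mine, not taken from any real BMS, and real packs use chemistry-specific limits and far more elaborate logic.

```python
from dataclasses import dataclass

# Illustrative limits only (assumed values, not from a real battery pack).
MIN_VOLTS = 2.5
MAX_VOLTS = 4.2
MAX_TEMP_C = 60.0


@dataclass
class CellReading:
    cell_id: int
    volts: float
    temp_c: float


def cells_to_isolate(readings):
    """Return the ids of cells whose readings fall outside the assumed limits."""
    faulty = []
    for r in readings:
        out_of_range = not (MIN_VOLTS <= r.volts <= MAX_VOLTS)
        too_hot = r.temp_c > MAX_TEMP_C
        if out_of_range or too_hot:
            faulty.append(r.cell_id)
    return faulty


if __name__ == "__main__":
    pack = [
        CellReading(0, 3.7, 30.0),  # healthy
        CellReading(1, 4.5, 31.0),  # over-voltage
        CellReading(2, 3.6, 75.0),  # overheating
    ]
    print(cells_to_isolate(pack))
```

Even a toy check like this has to be right every time it runs, which is the point: a bug here is not a frozen app, it is a fault in a high-voltage pack.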

I would strongly question why so much code is needed, and how much testing is actually performed on these systems. Will EVs become like so many artifacts of the software world, where the user does the testing by driving the car, similar to how user-based testing works with smartphones and the like? How many software upgrades will have to be performed? And of course, how cyber-safe are these cars? Lots of questions, but like many things in the world of software, not many answers.
